The Curse of Ambiguous Metadata Fields

I work in the Getty Museum’s Collection Information & Access (CI&A) Department. I create metadata in our collection information database for new photography of artworks so digital images are available for Open Content (rights-free, high-res downloads), internal use, mobile apps, and everything in between.

Matt wrote about the challenges of incomplete or ambiguous data. This can make research difficult—but it also applies to making information accessible in the first place. In the past, the metadata we tracked for images sometimes had multiple meanings. This, coupled with legacy software dependencies and workflows, caused us to reevaluate how we were tracking and using our data. For example, we didn’t have a way to say an image was copyright-cleared but was not quality-approved for publish (or vice versa). And what happens when copyright data changes?

Manually updating such fields was quite time-consuming and prone to error. It wasn’t until the Open Content Program began that we in CI&A took a long hard look at all the variables and determined we needed to separate the information, and translate business rules into algorithms for programmatically determining usage clearance.

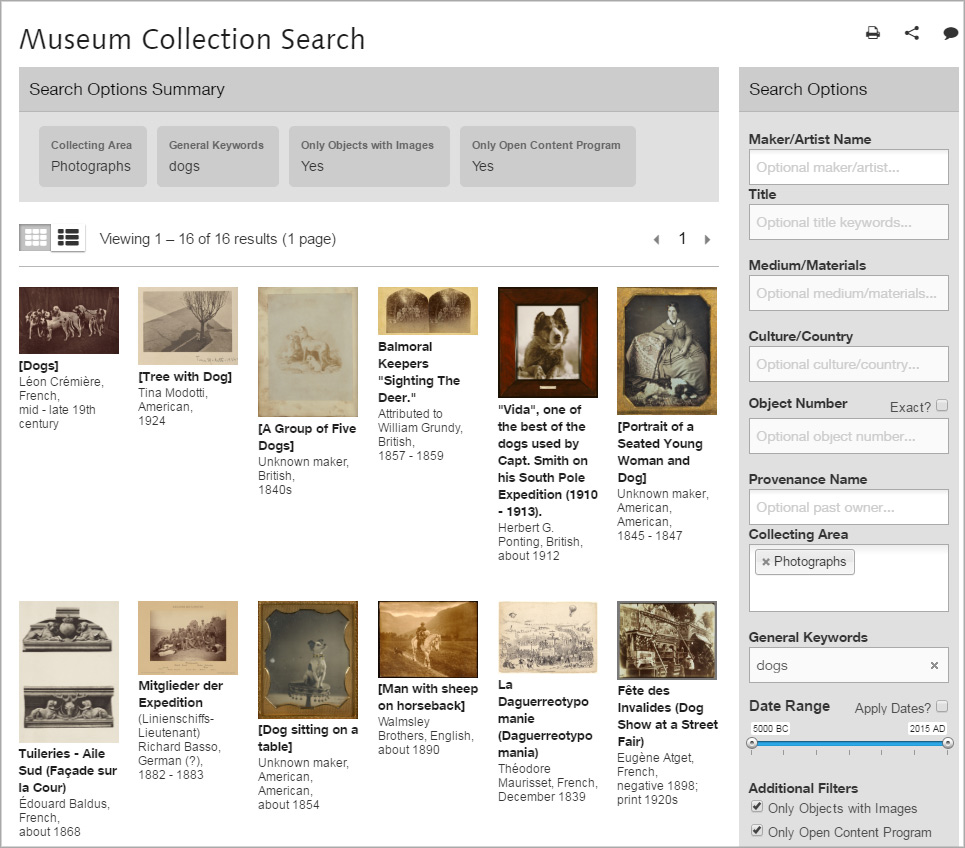

In 2012 our department began evaluating our data in preparation for launching the Open Content Program. We needed a way to efficiently and accurately release images for download based on several criteria. Ultimately we separated out three filters that were previously intertwined: Rights, Image Quality, and Approval for Public Access. Because these fields are separate, they can operate individually, allowing for more granular permissions and access. Obviously we want to default as much as possible to Open Content, making exceptions for images of insufficient quality or that we aren’t ready to show.

Rights are managed by the Registrar’s Office and include copyright, lender or artist agreements, privacy restrictions, etc. Object rights are managed at the object level in the database, not to be replicated and maintained in the media level of the database. Any media-specific copyright, used infrequently for images not made in-house, can be added at media level. For example, an image supplied by a vendor for a new acquisition might be published online before the object can be scheduled for photography in our studios. While the object is cleared for Open Content download, the vendor image would not be cleared for download and the metadata would reflect this. Within the media metadata, there is a field that denotes image quality and another that allows for approval by curators of what gets published to the online collection pages.

The Imaging Services team determines Image Quality at the media level. Most born-digital images are approved, but the older photography gets a closer look. Another example would be that recent high-quality studio photography would be published online, but iPhone snapshots in a storeroom would not.

Curatorial and conservation departments give Approval for Public Access, deciding which views should represent their objects online, also at the media level. This might include allowing preferred representations, restricting technical views and conservation work in progress.

Another challenge in making images accessible is creating relevancy in the digital image repository used by Getty staff. We need a consistent way to use data to identify what’s relevant, and that varies per user. When searching for images of an object in this repository, there are often several pages of image thumbnails to sift through. The faceted search is great, but only gets us so far. How do staff know which image is the most recent/best/preferred? Is there a way to demote older, less desirable images so that choosing the right image for a press release or blog post is easier?

We want older photography to still be available—for curators and conservators it’s a valuable record for condition reports, conservation, and study. But we don’t want the new, beautiful photography missed because it’s hard to find; such images are highly relevant for communications or publications staff. Imaging Services would be best at filtering images for various purposes—so we need a data field that reflects this. It may or may not fall under the Image Quality bucket, but we’re still undecided as of now.

The thing I’m most concerned with is doubling up meaning on a single metadata field. We’ve been down that road before! We already use the Image Quality field to describe if something is born digital or needs a closer review. If we include a qualifier for “most recent/preferred,” will that get tangled with other fields or alternate interpretations in the future? Adding a new image should not require an audit of all known media. Is this something that can be programmed into our workflow to update automatically?

The thing I’m most concerned with is doubling up meaning on a single metadata field. We’ve been down that road before! We already use the Image Quality field to describe if something is born digital or needs a closer review. If we include a qualifier for “most recent/preferred,” will that get tangled with other fields or alternate interpretations in the future? Adding a new image should not require an audit of all known media. Is this something that can be programmed into our workflow to update automatically?

This refinement of data-driven image access is an ongoing collaboration between my department and the Getty’s ITS department. The end goal is to make our images easily accessible through Open Content, internal use, or otherwise. That can only be achieved through clean and meaningful metadata. The definitions of fields and values should as unambiguous as possible, which is hard to do with so many stakeholders and intended uses!

—Krystal Boehlert, Metadata Specialist, Collection Information & Access

Blog originally published November 28, 2016 on The Getty’s The Iris for the #MetadataMonday series, Metadata Specialists Share Their Challenges, Defeats, and Triumphs under a CC-BY license.

Tagged with: metadata, museum, technology

Category: Museums | Technology

Write a Reply or Comment

You must be logged in to post a comment.